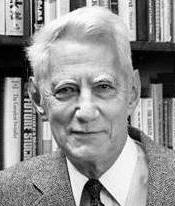

Claude Shannon

1916-2001

|

Shannon was an American electronic engineer and mathematician. He is known as "the father of information theory" for having founded this field with one landmark paper published in 1948.

Information theory is a branch of applied mathematics and electrical engineering involving the quantification of information. Historically, information theory was developed to find fundamental limits on compressing and reliably communicating data.

A key measure of information in the theory is known as entropy, which is usually expressed as the average number of bits needed for storage or communication. Intuitively, entropy quantifies the uncertainty involved when encountering a random variable. For example, a fair coin flip (2 equally likely outcomes, 1 bit) has less entropy than a roll of a fair die (6 equally likely outcomes, about 2.58 bits).
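The coin and die comparison can be checked numerically. The following is a minimal sketch that assumes the standard definition of entropy for a discrete distribution, H = -Σ p·log2(p), which the text above does not state explicitly. The function name `shannon_entropy` and the variable names are illustrative only.

```python
import math

def shannon_entropy(probabilities):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

coin = [1 / 2] * 2  # fair coin: 2 equally likely outcomes
die = [1 / 6] * 6   # fair die: 6 equally likely outcomes

coin_bits = shannon_entropy(coin)
die_bits = shannon_entropy(die)
print(f"coin: {coin_bits:.3f} bits, die: {die_bits:.3f} bits")
```

For n equally likely outcomes the sum reduces to log2(n), so under this definition more equally likely outcomes always means more entropy.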

Shannon is also credited with founding both digital computer and digital circuit design theory in 1937, when, as a 21-year-old master's student at MIT, he wrote a thesis demonstrating that electrical applications of Boolean algebra could construct and resolve any logical, numerical relationship.

It has been claimed that this was the most important master's thesis of all time.

|